Exploring Machine Learning on AWS

In the realm of machine learning (ML), Amazon Web Services (AWS) has established itself as a powerhouse, offering a robust suite of tools and services that enable developers and data scientists to build, train, and deploy ML models at scale. From popular frameworks like TensorFlow and PyTorch to services like Amazon SageMaker and Amazon Bedrock, AWS provides a comprehensive ecosystem for ML development. In this article, we explore the key frameworks and services for machine learning on AWS, highlight their features and benefits, and walk through a code example.

Frameworks for Machine Learning on AWS

Amazon SageMaker is a fully managed service that provides every developer and data scientist with the ability to build, train, and deploy ML models quickly. It supports popular ML frameworks such as TensorFlow, PyTorch, and Apache MXNet, allowing users to choose the framework that best suits their needs.
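Behind the scenes, a SageMaker training job is described by a single request to the `CreateTrainingJob` API. The sketch below builds that request as a plain Python dictionary so its structure is easy to see. The job name, container image URI, IAM role ARN, and S3 paths are hypothetical placeholders, and the instance type, volume size, and one-hour limit are illustrative choices rather than recommendations.

```python
def build_training_job_request(job_name, image_uri, role_arn, train_s3_uri,
                               output_s3_uri, instance_type="ml.m5.xlarge",
                               instance_count=1):
    """Build keyword arguments for SageMaker's CreateTrainingJob API.

    Every identifier passed in is assumed to be a caller-supplied placeholder.
    """
    return {
        "TrainingJobName": job_name,
        "AlgorithmSpecification": {
            "TrainingImage": image_uri,   # framework or custom training container
            "TrainingInputMode": "File",  # copy input data to the instance first
        },
        "RoleArn": role_arn,              # IAM role SageMaker assumes to access S3
        "InputDataConfig": [{
            "ChannelName": "train",
            "DataSource": {"S3DataSource": {
                "S3DataType": "S3Prefix",
                "S3Uri": train_s3_uri,
                "S3DataDistributionType": "FullyReplicated",
            }},
        }],
        "OutputDataConfig": {"S3OutputPath": output_s3_uri},  # model artifacts go here
        "ResourceConfig": {
            "InstanceType": instance_type,
            "InstanceCount": instance_count,
            "VolumeSizeInGB": 30,
        },
        "StoppingCondition": {"MaxRuntimeInSeconds": 3600},   # stop after one hour
    }

# Example usage (all values are hypothetical placeholders):
# request = build_training_job_request(
#     "demo-training-job", "<training-image-uri>",
#     "arn:aws:iam::123456789012:role/MySageMakerRole",
#     "s3://my-bucket/train/", "s3://my-bucket/output/")
# boto3.client("sagemaker").create_training_job(**request)
```

In practice, the SageMaker Python SDK's framework estimators, such as `sagemaker.pytorch.PyTorch`, assemble a request like this for you. Seeing the raw request shows which pieces a training job always needs: a container, a role, input and output locations, compute resources, and a stopping condition.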

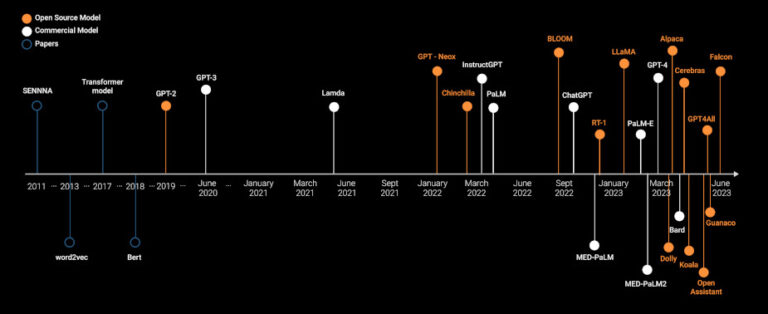

TensorFlow and PyTorch are two of the most widely used open-source frameworks for ML. Both are supported on AWS, for example through SageMaker's prebuilt framework containers and the AWS Deep Learning AMIs, giving users flexibility in how they develop ML models.

Hugging Face Transformers is a popular library for natural language processing (NLP) tasks. It provides pre-trained models and state-of-the-art architectures that can be trained and deployed on AWS, for example through SageMaker's Hugging Face Deep Learning Containers.
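Once a Transformers model is deployed to a SageMaker real-time endpoint, clients typically send it JSON with an `inputs` field. The sketch below assumes a hypothetical endpoint named `my-hf-endpoint` that serves a text-classification pipeline. It builds the arguments for the `sagemaker-runtime` `invoke_endpoint` call and picks the best label from a response shaped like that pipeline's output, which is a list of `label`/`score` pairs.

```python
import json


def build_invoke_args(endpoint_name, text):
    """Arguments for the sagemaker-runtime invoke_endpoint call."""
    return {
        "EndpointName": endpoint_name,
        "ContentType": "application/json",
        "Body": json.dumps({"inputs": text}),  # payload key used by Hugging Face containers
    }


def top_label(response_body):
    """Return the highest-scoring label from a text-classification response body.

    Accepts str or bytes, since json.loads handles both.
    """
    predictions = json.loads(response_body)
    best = max(predictions, key=lambda p: p["score"])
    return best["label"]

# Example usage against the hypothetical endpoint:
# runtime = boto3.client("sagemaker-runtime")
# resp = runtime.invoke_endpoint(**build_invoke_args("my-hf-endpoint", "I love this!"))
# print(top_label(resp["Body"].read()))
```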

Apache MXNet is an open-source deep learning framework designed for scalability and efficiency. It is optimized for distributed computing and is well suited to training ML models on large datasets. Note that the project has since been retired to the Apache Attic.

AWS ML Services

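Amazon Bedrock: A fully managed service that provides access to foundation models (FMs) from Amazon and other leading AI companies through a single API. Instead of training models from scratch, developers use Bedrock to build generative AI applications. They can also customize models with their own data through fine-tuning and add capabilities such as knowledge bases and agents.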
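To illustrate how an application calls a model through Amazon Bedrock, the sketch below builds a request for the Bedrock Runtime `Converse` API and pulls the reply text out of a response. It assumes a model that supports `Converse`. The model ID is left as a placeholder, and the token limit and temperature are illustrative.

```python
def build_converse_request(model_id, prompt, max_tokens=512, temperature=0.5):
    """Keyword arguments for the Bedrock Runtime Converse API (single user turn)."""
    return {
        "modelId": model_id,
        "messages": [{"role": "user", "content": [{"text": prompt}]}],
        "inferenceConfig": {"maxTokens": max_tokens, "temperature": temperature},
    }


def extract_reply(response):
    """Concatenate the text blocks of the assistant message in a Converse response."""
    content = response["output"]["message"]["content"]
    return "".join(block["text"] for block in content if "text" in block)

# Example usage (model ID is a placeholder):
# client = boto3.client("bedrock-runtime")
# response = client.converse(**build_converse_request("<model-id>", "Summarize MLOps in one line."))
# print(extract_reply(response))
```

`Converse` uses the same message format across supported models. Because of that, switching models is mostly a matter of changing `modelId` rather than rewriting the request body.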

Amazon Elastic Kubernetes Service (EKS) and Amazon Elastic Container Service (ECS): Container orchestration services for deploying, managing, and scaling containerized applications. They are well suited to running containerized ML workloads, such as distributed training jobs or model-serving APIs, on clusters that scale with demand.
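On ECS, the resources a containerized ML workload needs are declared in a task definition. The sketch below builds the arguments for the ECS `RegisterTaskDefinition` API for a GPU-backed model server. The family name, container name, image, and CPU/memory sizes are hypothetical. The sketch targets the EC2 launch type because GPU tasks require GPU-equipped container instances.

```python
def build_gpu_task_definition(family, image, cpu=4096, memory=16384, gpus=1):
    """Arguments for ECS register_task_definition with a GPU reservation.

    cpu is in ECS CPU units (1024 = 1 vCPU); memory is a hard limit in MiB.
    """
    return {
        "family": family,
        "requiresCompatibilities": ["EC2"],  # GPUs come from EC2 container instances
        "containerDefinitions": [{
            "name": "model-server",          # hypothetical container name
            "image": image,
            "cpu": cpu,
            "memory": memory,
            "essential": True,
            # ECS expects the GPU count as a string
            "resourceRequirements": [{"type": "GPU", "value": str(gpus)}],
        }],
    }

# Example usage (family and image are placeholders):
# boto3.client("ecs").register_task_definition(
#     **build_gpu_task_definition("ml-inference", "<ecr-image-uri>"))
```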

Amazon EC2 (Elastic Compute Cloud): Provides scalable compute capacity, letting users run ML tasks on virtual servers chosen for their workload, such as general-purpose, memory-optimized, or GPU-accelerated instances.
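Choosing an instance for an ML task mostly comes down to picking an instance type when launching it. The sketch below maps the three workload profiles mentioned above to example instance types and builds arguments for the EC2 `RunInstances` API. The specific types (`m5.xlarge`, `r5.xlarge`, `g5.xlarge`) are illustrative picks from each family. The AMI ID and instance name are placeholders.

```python
# Illustrative mapping from workload profile to one example instance type per family.
INSTANCE_TYPE_BY_PROFILE = {
    "general-purpose": "m5.xlarge",
    "memory-optimized": "r5.xlarge",
    "gpu": "g5.xlarge",
}


def build_run_instances_args(profile, ami_id, name):
    """Arguments for EC2 run_instances that launch a single tagged instance."""
    if profile not in INSTANCE_TYPE_BY_PROFILE:
        raise ValueError(f"unknown profile: {profile!r}")
    return {
        "ImageId": ami_id,
        "InstanceType": INSTANCE_TYPE_BY_PROFILE[profile],
        "MinCount": 1,
        "MaxCount": 1,
        "TagSpecifications": [{
            "ResourceType": "instance",
            "Tags": [{"Key": "Name", "Value": name}],
        }],
    }

# Example usage (AMI ID is a placeholder):
# boto3.client("ec2").run_instances(**build_run_instances_args("gpu", "<ami-id>", "ml-trainer"))
```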

Lex, Rekognition, Transcribe: AWS offers a range of AI services that can be integrated into ML workflows. Amazon Lex is a service for building conversational interfaces, Amazon Rekognition is a service for image and video analysis, and Amazon Transcribe is a service for converting speech to text. These services enable developers to add advanced AI capabilities to their applications with minimal effort.
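As one example of these AI services, the sketch below builds a request for Amazon Rekognition's `DetectLabels` API on an image stored in S3 and extracts label names from the response. The bucket and object key are hypothetical, and the label limit and confidence threshold are illustrative defaults.

```python
def build_detect_labels_args(bucket, key, max_labels=10, min_confidence=80.0):
    """Arguments for Rekognition detect_labels on an image stored in S3."""
    return {
        "Image": {"S3Object": {"Bucket": bucket, "Name": key}},
        "MaxLabels": max_labels,
        "MinConfidence": min_confidence,  # percentage, 0-100
    }


def label_names(response):
    """Label names from a DetectLabels response, highest confidence first."""
    labels = sorted(response["Labels"], key=lambda label: label["Confidence"], reverse=True)
    return [label["Name"] for label in labels]

# Example usage (bucket and key are placeholders):
# rekognition = boto3.client("rekognition")
# response = rekognition.detect_labels(**build_detect_labels_args("my-bucket", "photos/cat.jpg"))
# print(label_names(response))
```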

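Lambda, S3, and DynamoDB: AWS Lambda functions can be triggered by Amazon S3 bucket events, such as a new object being uploaded, and by DynamoDB Streams when new records are written. This makes them a lightweight way to run ML tasks in response to data changes.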

Code Example: A Python Lambda Function Triggered by S3 Events and DynamoDB Streams

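```python
import json
from io import BytesIO
from urllib.parse import unquote_plus

import boto3
import joblib  # sklearn.externals.joblib was removed in scikit-learn 0.23
import pandas as pd
from boto3.dynamodb.types import TypeDeserializer

s3_client = boto3.client('s3')
dynamodb_client = boto3.client('dynamodb')
deserializer = TypeDeserializer()

# Load the pre-trained model once per container, outside the handler, so warm
# invocations reuse it (e.g., bundle it in the deployment package or a layer under /opt)
model = joblib.load('/path/to/pretrained_model.pkl')


def lambda_handler(event, context):
    for record in event['Records']:
        if record['eventSource'] == 'aws:s3' and record['eventName'].startswith('ObjectCreated'):
            # Process data from S3; object keys arrive URL-encoded in the event
            bucket_name = record['s3']['bucket']['name']
            object_key = unquote_plus(record['s3']['object']['key'])
            response = s3_client.get_object(Bucket=bucket_name, Key=object_key)
            data = pd.read_csv(BytesIO(response['Body'].read()))

            # Make predictions
            predictions = model.predict(data)

            # Do something with predictions (e.g., save to DynamoDB)
            # dynamodb_client.put_item(...)

        elif record['eventSource'] == 'aws:dynamodb' and record['eventName'] == 'INSERT':
            # Process data from DynamoDB: NewImage holds typed attributes such as
            # {'price': {'N': '9.99'}}, so convert them to plain Python values
            new_image = record['dynamodb']['NewImage']
            item = {name: deserializer.deserialize(value) for name, value in new_image.items()}

            # Do something with the new item (e.g., use it as input for ML)

    return {
        'statusCode': 200,
        'body': json.dumps('ML tasks completed successfully')
    }
```

This Lambda function can be configured to trigger on S3 bucket events (`ObjectCreated`) and DynamoDB stream events (`INSERT`), so you can run machine learning tasks such as data preprocessing and inference in response to those events. pandas, scikit-learn, and joblib are not part of the Lambda Python runtime, so package them with the function, for example as a Lambda layer or a container image. For `NewImage` to be present, the DynamoDB stream must use a view type that includes new images (`NEW_IMAGE` or `NEW_AND_OLD_IMAGES`).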
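To exercise a handler like this without uploading files or writing to a table, you can invoke it with hand-built events. The sketch below creates minimal S3 `ObjectCreated` and DynamoDB Streams `INSERT` events. They are trimmed to the fields the handler reads, so real events contain more fields. The bucket, key, and item values are hypothetical.

```python
from urllib.parse import quote_plus


def make_s3_created_event(bucket, key):
    """Minimal S3 ObjectCreated event, trimmed to the fields the handler reads."""
    return {"Records": [{
        "eventSource": "aws:s3",
        "eventName": "ObjectCreated:Put",
        # S3 URL-encodes keys in notifications (spaces become '+', '/' is kept)
        "s3": {"bucket": {"name": bucket}, "object": {"key": quote_plus(key, safe="/")}},
    }]}


def make_dynamodb_insert_event(new_image):
    """Minimal DynamoDB Streams INSERT event; new_image uses DynamoDB typed JSON."""
    return {"Records": [{
        "eventSource": "aws:dynamodb",
        "eventName": "INSERT",
        "dynamodb": {"NewImage": new_image},
    }]}
```

With the model file available and AWS credentials configured, a call such as `lambda_handler(make_s3_created_event("my-bucket", "data/new file.csv"), None)` would run the S3 branch. The encoded key shows why the handler decodes keys before calling `get_object`.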

Machine learning on AWS offers a powerful combination of frameworks and services that enable developers and data scientists to build, train, and deploy ML models with ease. Whether you are a seasoned ML practitioner or just getting started with ML, AWS provides the tools and infrastructure you need to take your ML projects to the next level.