Local Setup for LLM Testing using a Simple Webpage

In the rapidly evolving field of AI and natural language processing, developers often need a local environment to test and deploy models. A local Linux distribution (distro) provides a stable and flexible platform for this purpose. This article walks through setting up a headless Linux distro and configuring it for LLM testing with a simple webpage interface.

Setting up the Linux Distro

First, choose a Linux distro that suits your needs. Popular choices include Ubuntu/Debian, RHEL/CentOS, and Arch/Artix; the choice is yours. This example uses Ubuntu 22.04 LTS Server (headless), so the package commands below use apt.

Update and upgrade your system: sudo apt update && sudo apt upgrade -y

Install the Apache2 web server: sudo apt install apache2

Optionally, allow SSH and Apache2 through ufw: sudo ufw allow 22/tcp && sudo ufw allow 80/tcp && sudo ufw allow 443/tcp

Installing Ollama and LLM Testing Models

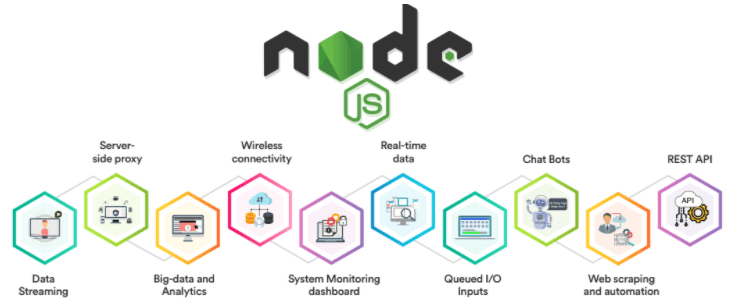

Next, we’ll install Ollama and test some models. Ollama provides various models for different tasks. Here’s how to install Ollama and pull gemma:2b, a 1.6GB model by Google. Ollama auto-detects hardware and supports x86 and ARM processors. GPU acceleration is supported on both NVIDIA CUDA and AMD graphics cards.

Install Ollama with the following command: curl -fsSL https://ollama.ai/install.sh | sh

Then pull the gemma:2b model: ollama pull gemma:2b

Check that the Ollama API is running: curl http://localhost:11434 (it should reply "Ollama is running").

To use other models, change the model name in the command and in the HTML code to match. Some options:

- Llama 2: A 7B model with 3.8GB size. Command: ollama run llama2

- Mistral: A 7B model with 4.1GB size. Command: ollama run mistral

- Dolphin Phi: A 2.7B model with 1.6GB size. Command: ollama run dolphin-phi

- Phi-2: A 2.7B model with 1.7GB size. Command: ollama run phi

- Tinyllama: A small model suited to ARM devices like Raspberry Pi. Command: ollama run tinyllama
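Each model you want to select on the webpage needs its own option in the model dropdown, with the value set to the exact name used in the ollama command. As a minimal sketch, the options could be generated in script.js instead of typed by hand. The MODELS array and the escapeHtml and modelOptionsHtml helpers are hypothetical names for this illustration, not part of Ollama, and the list should contain only models you have actually pulled.

```javascript
// Hypothetical list: keep only the models you have actually pulled.
const MODELS = ['gemma:2b', 'llama2', 'mistral', 'dolphin-phi', 'phi', 'tinyllama'];

// Escape characters that are special in HTML so a model name cannot break the markup.
function escapeHtml(text) {
  return String(text)
    .replace(/&/g, '&amp;')
    .replace(/</g, '&lt;')
    .replace(/>/g, '&gt;')
    .replace(/"/g, '&quot;');
}

// Build one <option> per model; value and label are both the model name.
function modelOptionsHtml(models) {
  return models
    .map(name => '<option value="' + escapeHtml(name) + '">' + escapeHtml(name) + '</option>')
    .join('\n');
}

// In the browser, fill the <select id="model"> element from the list.
// The check keeps the file loadable outside a browser (for example in Node).
if (typeof document !== 'undefined') {
  document.getElementById('model').innerHTML = modelOptionsHtml(MODELS);
}
```

Because the value and the visible label come from the same string, the name sent to the API always matches what the dropdown shows.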

Creating a Simple Webpage User Interface

To interact with the Ollama models you pulled through a basic webpage, follow these steps to create a simple HTML and JavaScript interface. Other solutions exist, such as Web-UI and GPT4All, but for this demonstration we will create our own webpage using the Ollama API.

Create an HTML file (e.g., index.html) with the following content in the Apache2 default location. Run sudo nano /var/www/html/index.html, replace the contents of the default page with the code below, then press Ctrl-X followed by Y and Enter to save the file.

<!DOCTYPE html>
<html>
<head>
  <title>LLM Interface</title>
</head>
<body>
  <div class="container">
    <h1>LLM User Interface</h1>
    <textarea id="input" rows="20" cols="20" placeholder="Enter text..."></textarea><br>
    Select a Model: <select id="model">
      <option value="gemma:2b">gemma:2b</option>
    </select><br>
    <button onclick="callAPI()">Submit</button><br>
    <textarea id="output" rows="20" cols="20" readonly placeholder="Output will appear here..."></textarea><br>
  </div>
  <script src="script.js"></script>
</body>
</html>

Create a JavaScript file (e.g., script.js) with the following content in the same Apache2 default location. Run sudo nano /var/www/html/script.js, paste the code, then press Ctrl-X followed by Y and Enter to save the file.

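// Send the prompt to the local Ollama API and show the reply on the page.
function callAPI() {
  var inputText = document.getElementById('input').value;
  // Default to 'gemma:2b' if no model is selected
  var selectedModel = document.getElementById('model').value || 'gemma:2b';
  var output = document.getElementById('output');

  fetch('http://localhost:11434/api/generate', {
    method: 'POST',
    headers: {
      'Content-Type': 'application/json'
    },
    body: JSON.stringify({
      model: selectedModel,
      prompt: inputText,
      raw: true,     // send the prompt as-is, without the model's prompt template
      stream: false  // wait for the complete answer in a single JSON object
    })
  })
    .then(response => {
      // Surface HTTP errors (for example an unknown model name) instead of parsing them as a reply
      if (!response.ok) {
        throw new Error('HTTP ' + response.status);
      }
      return response.json();
    })
    .then(data => {
      output.value = data.response;
    })
    .catch(error => {
      console.error('Error:', error);
      output.value = 'Error: ' + error.message;
    });
}

To view the LLM interface, open your preferred web browser (such as Firefox or Chrome) and enter the server's IP address, or localhost, in the address bar. Depending on your hardware and the complexity of your prompt, the output can take some time to appear. Because script.js calls http://localhost:11434, the browser has to run on the same machine as Ollama; to browse from another machine, that URL must point at the server and Ollama must be configured to accept remote requests (for example through its OLLAMA_HOST and OLLAMA_ORIGINS environment variables).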
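With stream set to false, the output box stays empty until the whole answer is ready. The generate endpoint can also stream its reply as newline-delimited JSON objects, each carrying a piece of the answer in its response field and the last one marked with done set to true. The following is a minimal sketch of assembling such a stream, assuming that format; appendStreamChunk and the state object are hypothetical names for this illustration, and the sample chunks are made up.

```javascript
// Hypothetical helper: feed it raw text chunks from a streamed /api/generate reply.
// Any incomplete trailing line is kept in state.buffer until the rest arrives.
function appendStreamChunk(state, chunk) {
  const lines = (state.buffer + chunk).split('\n');
  state.buffer = lines.pop(); // the last piece may be an incomplete JSON line
  for (const line of lines) {
    if (line.trim() === '') continue; // skip empty lines
    const part = JSON.parse(line);
    if (part.response) state.text += part.response;
    if (part.done) state.done = true;
  }
  return state;
}

// Made-up example: two chunks that split a JSON line in the middle.
const state = { buffer: '', text: '', done: false };
appendStreamChunk(state, '{"response":"Hel","done":false}\n{"respo');
appendStreamChunk(state, 'nse":"lo","done":false}\n{"response":"","done":true}\n');
console.log(state.text, state.done);
```

In the browser, the chunks would come from reading response.body with a reader and a TextDecoder after sending stream: true, and the output textarea could be updated with state.text after every chunk so the answer appears as it is generated.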

Testing LLMs on a local setup gives you a robust and adaptable environment for AI development. By building a local chatbot webpage around openly available models, you can generate the verbose output needed to evaluate accuracy and speed with your own data. Improving model performance begins with testing and training. Accuracy and speed become critical in applications such as AutoGen, where models trained on your data can work together as an AI think tank.

- Experiment with different LLMs to see which one best suits your needs.
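To put a number on speed, the timing fields in the API reply can be used. The sketch below assumes the non-streaming reply from /api/generate includes eval_count (the number of generated tokens) and eval_duration (the generation time in nanoseconds), as described in the Ollama API documentation. The tokensPerSecond helper is a hypothetical name and the sample values are made up for illustration.

```javascript
// Hypothetical helper: generation speed from an /api/generate reply.
// Assumes eval_count is the number of generated tokens and
// eval_duration is the generation time in nanoseconds.
function tokensPerSecond(data) {
  if (!data || !data.eval_count || !data.eval_duration) {
    return null; // timing fields missing, nothing to report
  }
  return data.eval_count / (data.eval_duration / 1e9);
}

// Made-up sample values, for illustration only: 120 tokens in 4 seconds.
const sample = { response: '...', eval_count: 120, eval_duration: 4000000000 };
console.log(tokensPerSecond(sample)); // 30
```

Calling this on the parsed reply inside callAPI and logging the result for each model gives a simple way to compare models on the same prompt and hardware.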

- Consider using OllamaHub and Hugging Face for additional methods of testing and deploying models.

- Stay updated with the latest developments in AI and natural language processing to enhance your projects with BHPSC